Vector embeddings underpin much of modern artificial intelligence (AI) and natural language processing (NLP) by converting complex data into numerical vectors. This guide is designed to help beginners understand vector embeddings, explore their real-world applications, and learn why they are crucial for modern machine learning.

What Are Vector Embeddings?

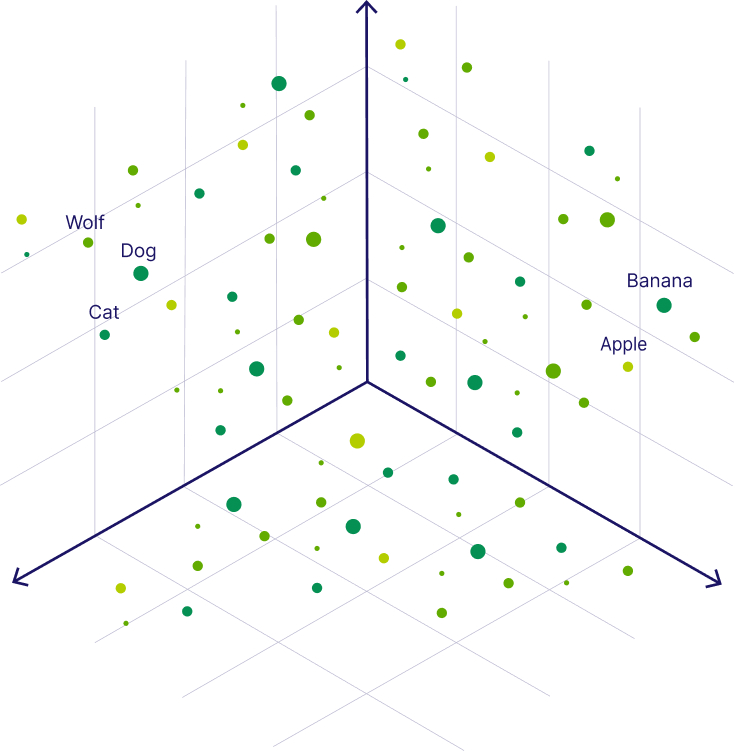

Vector embeddings represent data, such as words, images, or documents, as numeric vectors in a continuous space. By transforming raw, unstructured data into a format that algorithms can process, vector embeddings capture relationships and patterns that are essential for AI tasks. For example, in NLP, a well-trained embedding space places words so that the offset from “man” to “woman” is roughly the same as the offset from “king” to “queen.” This method of data representation is key for many machine learning models.
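
To make the “king” and “queen” example concrete, here is a minimal sketch of the analogy arithmetic. The three-dimensional vectors and their axis meanings are hypothetical and hand-picked for illustration; real embeddings are learned from data and have many more dimensions. The sketch uses Euclidean distance for simplicity, although cosine similarity is also common for this task.

```python
import math

# Hypothetical toy vectors, hand-picked for illustration only.
# Assumed axes: [royalty, maleness, femaleness]
toy_vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.1, 0.1],
}

def analogy(a, b, c, vectors):
    """Return the word whose vector is closest to vec(a) - vec(b) + vec(c)."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the three input words so they cannot be returned as the answer.
    candidates = (w for w in vectors if w not in (a, b, c))
    return min(candidates, key=lambda w: math.dist(vectors[w], target))

result = analogy("king", "man", "woman", toy_vectors)
print(result)
```

With these toy values, subtracting “man” from “king” and adding “woman” lands on the vector assigned to “queen”, which is why that word is returned.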

How Are Vector Embeddings Created?

Learning Through Neural Networks

Vector embeddings are typically generated using neural networks trained on large datasets. Techniques such as word2vec, GloVe, and BERT learn word representations from the contexts in which words appear. word2vec and GloVe assign each word a single fixed vector, while BERT produces a different vector for the same word depending on the sentence around it. In each case the result is a dense vector with far fewer dimensions than a one-entry-per-vocabulary-word encoding. The process includes:

- Contextual Learning: In language models, each word is analyzed based on the surrounding words, enabling the model to capture semantic similarities.

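- Dimensionality Reduction: High-dimensional data is reduced to a manageable number of dimensions while preserving essential patterns. Static word embeddings such as word2vec and GloVe often use between 50 and 300 dimensions; contextual models are typically larger (BERT-base, for example, uses 768).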
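
The contextual learning step can be sketched as follows. The skip-gram variant of word2vec trains on (center word, context word) pairs taken from a sliding window; the snippet below shows only that data-preparation step, not the neural network training. The function name `skipgram_pairs` and the example sentence are assumptions made for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every word within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

sentence = "the cat sat on the mat".split()
pairs = skipgram_pairs(sentence, window=1)
print(pairs[:4])
```

Words that keep appearing in similar contexts produce similar training pairs, which is what pushes their vectors toward each other during training.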

Geometric Insights

In the vector space:

- Proximity: Similar data points are positioned closer together.

- Orientation: The direction of vectors can illustrate relationships, such as analogies between words.

- Clusters: Groups of similar items form clusters, simplifying the task of pattern recognition for machine learning algorithms.
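
A small sketch of proximity and clustering, assuming hypothetical two-dimensional embeddings chosen by hand so that two groups are visible. Each word's nearest neighbor by Euclidean distance falls inside its own group.

```python
import math

# Hypothetical 2-D embeddings, hand-picked so that animals and vehicles
# form two separate groups.
points = {
    "cat": (1.0, 1.2),
    "dog": (1.1, 0.9),
    "car": (5.0, 5.1),
    "truck": (5.2, 4.8),
}

def nearest_neighbor(word, vectors):
    """Return the other word whose vector is closest to `word`."""
    others = (w for w in vectors if w != word)
    return min(others, key=lambda w: math.dist(vectors[word], vectors[w]))

neighbors = {w: nearest_neighbor(w, points) for w in points}
print(neighbors)
```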

Why Are Vector Embeddings Important?

Capturing Semantic Meaning

One of the greatest strengths of vector embeddings is their ability to capture semantic meaning:

- Natural Language Understanding: They enable AI systems to interpret the meaning of words, phrases, and sentences, which is critical for applications like translation, sentiment analysis, and chatbots.

- Image Analysis: In computer vision, embeddings help in classifying and retrieving images by encoding visual features.

Enhancing Machine Learning Models

Vector embeddings offer several benefits for modern AI and machine learning:

- Efficient Data Search: By converting data into vectors, algorithms can quickly compute similarities using metrics such as cosine similarity.

- Improved Clustering: Embeddings allow for effective grouping of similar data points.

- Simplified Data Representation: High-dimensional data is reduced to compact vectors, making it easier to manage and analyze while retaining most of the structure that matters for downstream tasks.
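
The similarity search mentioned above can be sketched with a brute-force cosine similarity ranking. The document names and vectors are hypothetical; a production system would usually rely on an approximate nearest-neighbor index rather than scoring every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the names of the k vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in index.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]

# Hypothetical document embeddings, for illustration only.
index = {
    "doc_cooking": [0.9, 0.1, 0.0],
    "doc_baking": [0.8, 0.3, 0.1],
    "doc_finance": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]
results = top_k(query, index, k=2)
print(results)
```

Cosine similarity compares direction rather than length, so two vectors pointing the same way score close to 1 even if their magnitudes differ.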

Real-World Applications of Vector Embeddings

Natural Language Processing (NLP)

- Chatbots and Virtual Assistants: Vector embeddings enable these systems to understand and respond to user queries with greater accuracy.

- Content Recommendation: They help search engines and recommendation systems deliver relevant results based on semantic similarity.

Recommendation Systems

- E-commerce: Embeddings are used to analyze user behavior and product features, leading to more personalized recommendations.

- Streaming Services: Music and video streaming platforms use embeddings to suggest content that matches user preferences.
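
One simple way to turn embeddings into recommendations is to average the vectors of items a user liked and rank unseen items against that profile. This is a minimal sketch under that assumption, not a description of how any particular platform works; the item names, vectors, and axis meanings are hypothetical.

```python
# Hypothetical item embeddings; assumed axes: [action, romance]
items = {
    "action_movie_1": [0.9, 0.1],
    "action_movie_2": [0.8, 0.2],
    "romance_movie_1": [0.1, 0.9],
    "romance_movie_2": [0.2, 0.8],
}

def mean_vector(vectors):
    """Component-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]

def recommend(liked, items, k=1):
    """Rank items the user has not liked yet by dot product with their profile."""
    profile = mean_vector([items[name] for name in liked])
    unseen = [name for name in items if name not in liked]
    unseen.sort(
        key=lambda name: sum(p * x for p, x in zip(profile, items[name])),
        reverse=True,
    )
    return unseen[:k]

recs = recommend(["action_movie_1"], items)
print(recs)
```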

Computer Vision

- Facial Recognition: By representing faces as vectors, systems can efficiently match and verify identities.

- Image Search: Vector embeddings facilitate the search for similar images, enhancing the user experience on visual platforms.

Challenges and Considerations

Despite their powerful capabilities, vector embeddings come with some challenges:

- Computational Complexity: Storing and comparing high-dimensional vectors can be resource-intensive, because memory use and search cost grow with both the number of vectors and their dimensionality.

- Bias in Data: Since embeddings are trained on real-world data, they may inherit and even amplify biases present in the training material.

- Interpretability: Individual dimensions of an embedding rarely correspond to a human-readable concept, so understanding the inner workings of models that use vector embeddings can be difficult and model decisions can be hard to explain.
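
To put the computational complexity point in numbers, here is a back-of-the-envelope estimate of the raw storage an embedding collection needs. It assumes 32-bit floats (4 bytes per value) and ignores any index overhead or compression; the collection size and dimensionality are example figures, not measurements.

```python
def index_size_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for dense vectors: count * dimensions * bytes per value."""
    return num_vectors * dimensions * bytes_per_value

# Example figures: one million vectors with 768 dimensions each.
size = index_size_bytes(1_000_000, 768)
print(f"{size / 1e9:.2f} GB")
```

A brute-force similarity search over the same collection also touches every value once per query, so query cost scales with the same product of vector count and dimensionality.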

The Future of Vector Embeddings

The field of vector embeddings is evolving rapidly. With advancements in contextual embeddings (such as those in BERT and GPT models), AI systems are becoming increasingly adept at understanding complex patterns in data. As research continues, we can expect even more accurate models that better capture nuance and context, benefiting a wide range of applications from language translation to advanced image recognition.

Conclusion

Vector embeddings are a fundamental component of modern AI, transforming how machines understand and process complex data. This beginner-friendly guide has outlined what vector embeddings are, how they are created, and why they are essential in fields like NLP, computer vision, and recommendation systems. As the technology evolves, the role of vector embeddings will only grow, opening up new possibilities in machine learning and beyond.

For anyone looking to dive into AI and machine learning, understanding vector embeddings is an essential first step. Explore further, experiment with these concepts, and stay updated with the latest advancements in this exciting field!